An objective of this research is to demonstrate a means to automatically classify an image of an artifact using computer vision. I am using a method and code from Dattaraj Rao’s book Keras to Kubernetes: The Journey of a Machine Learning Model to Production. In Chapter 5 he demonstrates the use of machine learning to classify logo images of Pepsi and Coca Cola. I have used his code in an attempt to classify coin images of George VI and Elizabeth II.

Code for this is here: https://github.com/jeffblackadar/image_work/blob/master/Keras_to_Kubernetes_Rao.ipynb

The images I am using are here.

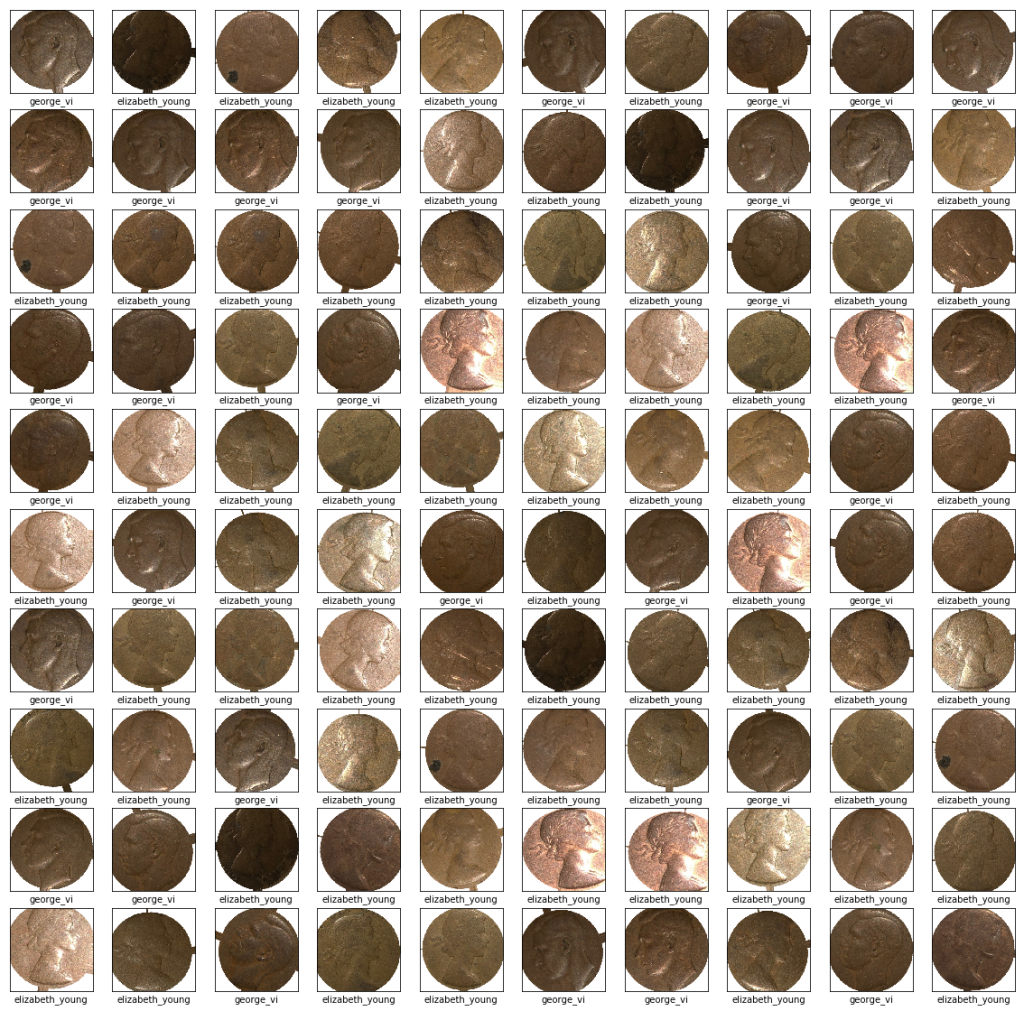

Below are my initial results; the prediction is shown below the image.

…So far so good…

[1 – footnote]

As noted above, this prediction failed.

I am not sure why yet, but here is my experience so far. On my first run through, the prediction failed for the first image of George VI too. I got the correct result when I used a larger image size for training and validation.

train_generator = train_datagen.flow_from_directory(

training_dir,

target_size=(300, 300),

(above) The original code uses an image size of 150 x 150 so I doubled it in each line of the program where that size is used. I may need to use a larger size than 300 x 300.

The colours of my coin images are somewhat uniform, while Rao’s example uses Coke’s red and white logo versus Pepsi’s logo with blue in it. Does color play a more significant role in image classification using Keras than I thought? I will look at what is happening during model training to see if I can address this issue.

Data Augmentation

I have a small number of coin images yet effective training of an image recognition model requires numerous different images. Rao uses the technique of data augmentation to manipulate a small set of images into a larger set of images that can be used for training by distorting them. This can be particularly useful when training a model to recognize images taken by cameras from different angles as would happen in outdoor photography. A portion of Rao’s code is below. Given the coin images I am using are photographed from above, I have reduced the level of distortion (shear, zoom, width and height shift.)

#From:

# Keras to Kubernetes: The Journey of a Machine Learning Model to Production

# Dattaraj Jagdish Rao

# Pages 152-153

from keras.preprocessing.image import ImageDataGenerator

import matplotlib.pyplot as plt

%matplotlib inline

training_dir = "/content/drive/My Drive/coin-image-processor/portraits/train"

validation_dir = "/content/drive/My Drive/coin-image-processor/portraits/validation"

gen_batch_size = 1

This is meant to train the model for images taken at different angles. I am going to assume pictures of coins are from directly above, so there is little variation

train_datagen = ImageDataGenerator(

rescale=1./255,

shear_range=0.05,

zoom_range=0.05,

fill_mode = "nearest",

width_shift_range=0.05,

height_shift_range=0.05,

rotation_range=20,

horizontal_flip=False)

train_generator = train_datagen.flow_from_directory(

training_dir,

target_size=(300, 300),

batch_size=32,

class_mode='binary')

class_names = ['elizabeth_young','george_vi']

print ("generating images")

ROW = 10

plt.figure(figsize=(20,20))

for i in range(ROW*ROW):

plt.subplot(ROW,ROW,i+1)

plt.xticks([])

next_set = train_generator.next()

plt.imshow(next_set[0][0])

plt.xticks([])

plt.yticks([])

plt.grid(False)

plt.xlabel(class_names[int(next_set[1][0])])

plt.show()

My next steps to improve the results I am getting are looking at what is happening as the models are trained and training the models longer using larger image sizes.

References

Rao, Dattaraj. Keras to Kubernetes: The Journey of a Machine Learning Model to Production. 2019.

[1 – footnote] This test image is from a Google search. The original image is from: https://www.cdncoin.com/1937-1964-60-Coin-Set-in-Case-p/20160428003.htm