This note describes my attempt to replicate the work of Drs. Arnau Garcia‐Molsosa, Hector A. Orengo, Dan Lawrence, Graham Philip, Kristen Hopper and Cameron A. Petrie in their article “Potential of deep learning segmentation for the extraction of archaeological features from historical map series.” [1]

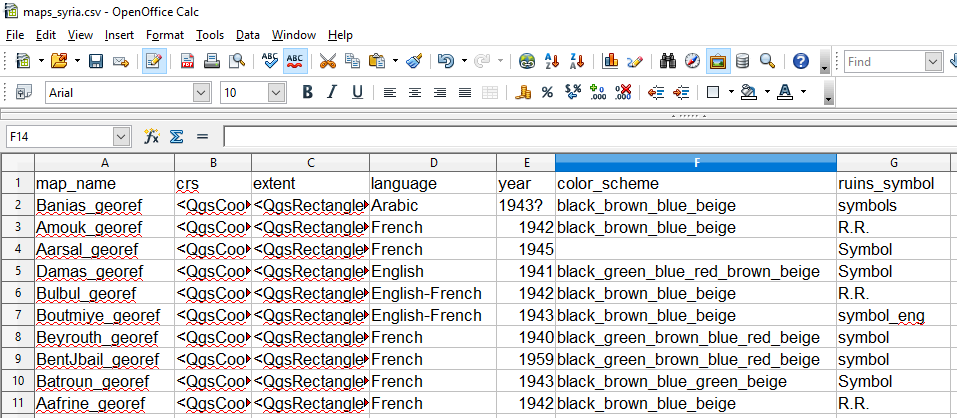

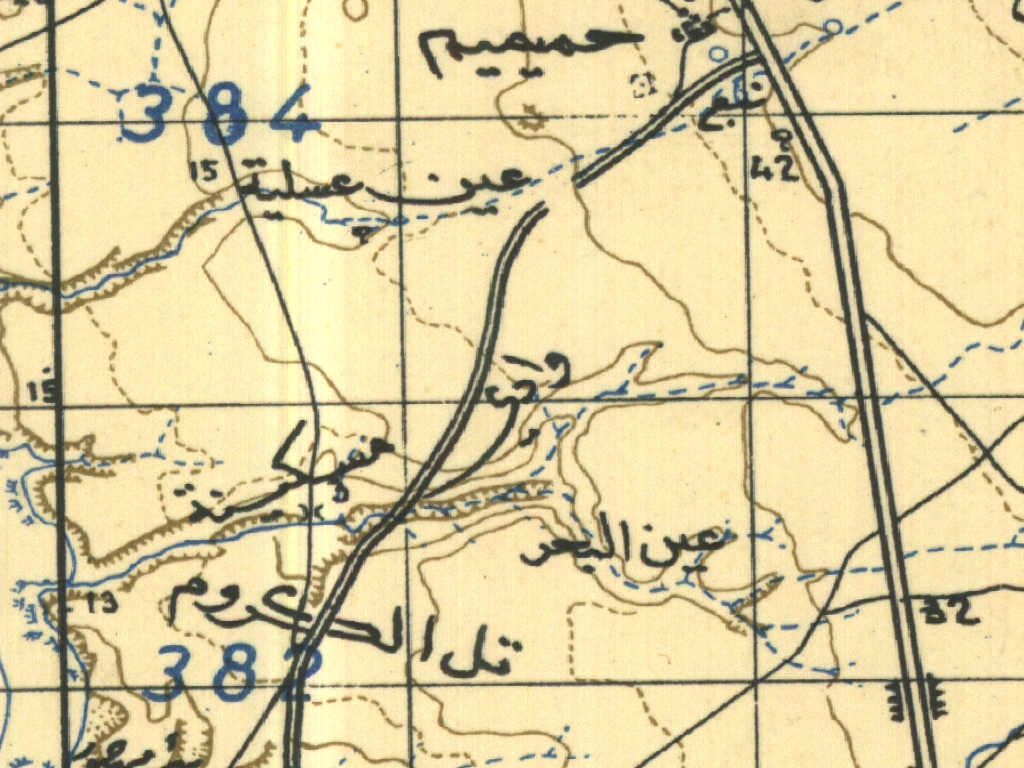

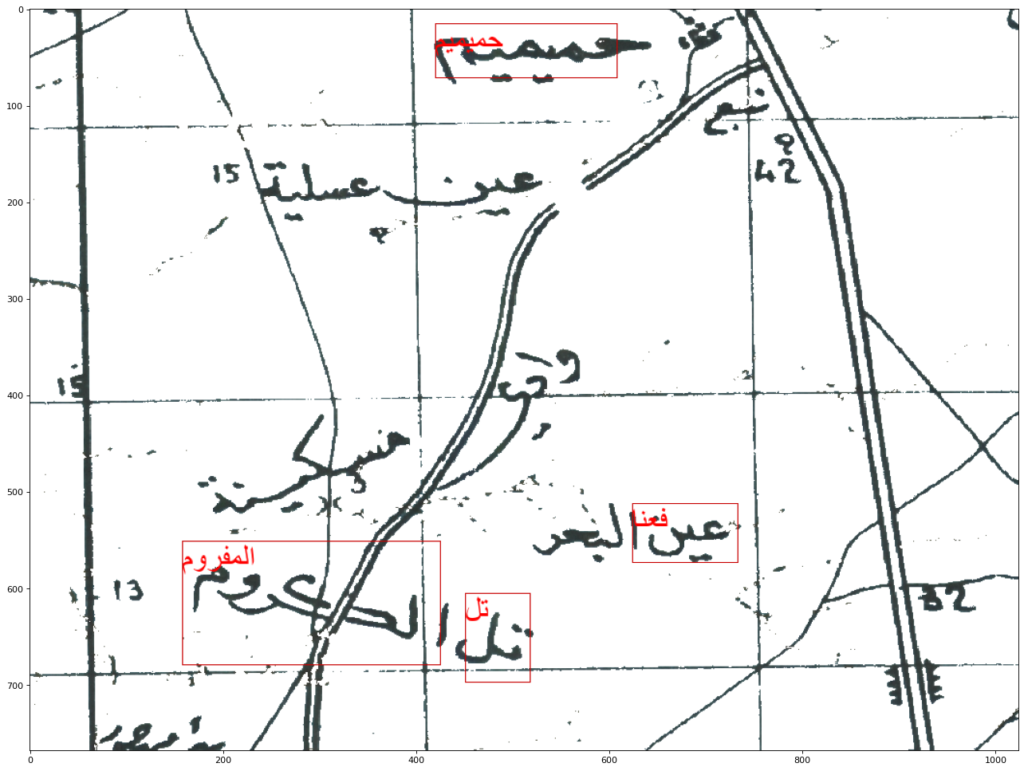

The authors demonstrate a working process to recognize map text. Their “Detector 3” targets the “word ‘Tell’ in Latin characters.” [1] As they note, “‘tell’ (Arabic for settlement mound) may appear as the name of a mound feature or a toponym in the absence of an obvious topographic feature. This convention may be due to the placement of the names on the map, or a real difference in the location of the named village and the tell site, or because the tell has been destroyed in advance of the mapping of the region.” [1]

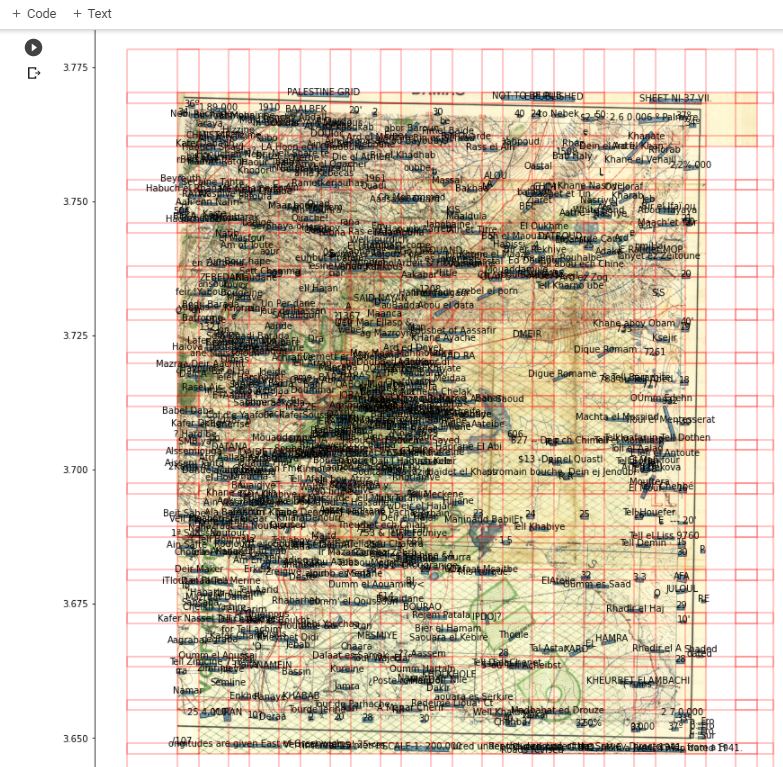

I worked with a georeferenced map provided by Dr. Kristen Hopper, Dr. Dan Lawrence and Dr. Hector Orengo: 1:200,000 Levant. Sheet NI-37-VII., Damas. 1941. The map uses Latin characters, so I used Azure Cognitive Services to transcribe the text. Below is part of the map showing “R.R.”, signifying Roman Ruins, and “Digue Romaine”, Roman dike.

This map contains a lot of text:

After transcription, I applied a crude filter that removed numbers and retained the labels whose lowercased text contained any of these strings: “romam”, “roman”, “romai”, “digue”, “ell”, “r.r.”. Below is a sample of what was retained:

keep i : 44 Tell Hizime

keep i : 56 Nell Sbate te

keep i : 169 Well Jourdich

keep i : 182 Tell abou ech Chine

keep i : 190 ell Hajan

keep i : 221 Tell Kharno ube

keep i : 240 Deir Mar Ellaso &

keep i : 263 Dique Romam

keep i : 303 Tell Arran

keep i : 308 Digue Romame

keep i : 313 & Tell Bararhite

keep i : 314 788 ou Tell Abed

keep i : 350 R.R.

keep i : 379 Telklaafar in Tell Dothen

keep i : 388 Tell Afair

keep i : 408 Tell el Assouad

keep i : 428 ETell el Antoute

keep i : 430 AiTell Dekova

keep i : 433 Tell' Chehbé

keep i : 436 R.R.

keep i : 437 ptromain bouche, Dein ej Jenoubi

keep i : 438 R.R.

keep i : 439 Tell el Moralla!?
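The crude filter described above can be sketched roughly as follows. This is a minimal illustration, not the code I actually ran: the function names (`is_number`, `keep_label`, `filter_labels`) are hypothetical, and it assumes that “removing numbers” means dropping labels made up only of digits and punctuation, such as spot heights.

```python
import re

# Substrings listed in the text; matching is done on the lowercased label.
KEYWORDS = ("romam", "roman", "romai", "digue", "ell", "r.r.")


def is_number(text: str) -> bool:
    """Assumption: a 'number' is a label made only of digits, spaces, dots and commas."""
    return re.fullmatch(r"[\d\s.,]+", text) is not None


def keep_label(text: str) -> bool:
    """Keep a transcribed label if it is not a number and contains a keyword."""
    lowered = text.lower()
    if is_number(lowered):
        return False
    return any(keyword in lowered for keyword in KEYWORDS)


def filter_labels(lines):
    """Return (index, text) pairs for the labels that pass the filter."""
    return [(i, text) for i, text in enumerate(lines) if keep_label(text)]


# Illustrative input only; the real input was the full OCR transcription.
sample = ["Tell Hizime", "788", "Digue Romame", "R.R.", "Damas"]
for i, text in filter_labels(sample):
    print("keep i :", i, text)
```

Because “ell” is matched as a plain substring, the filter also keeps transcription errors such as “Nell” or “Well” and unrelated names such as “Ellaso”, which is why the retained list needs to be read by eye.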

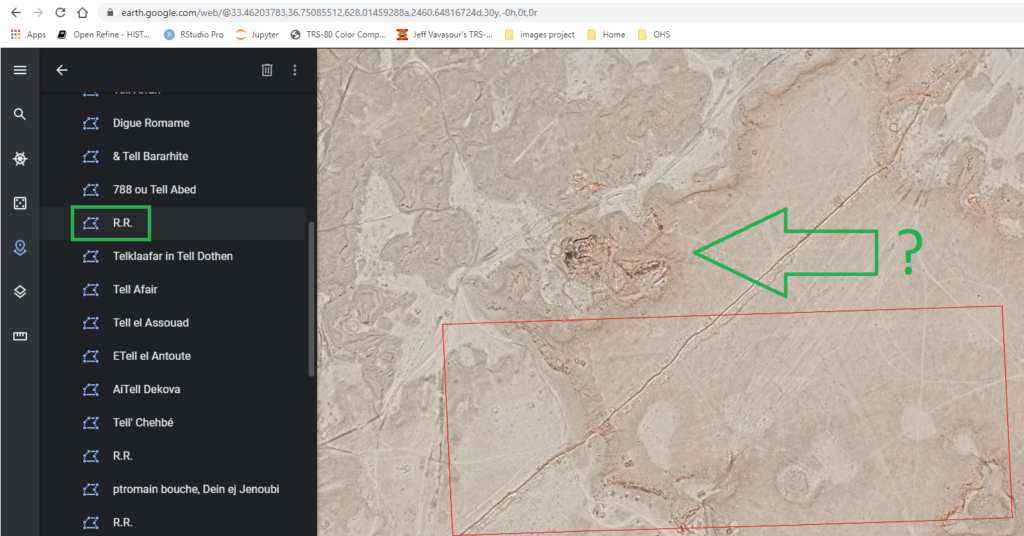

... etc.

Below is a screenshot of the georeferenced place names displayed on a satellite image in QGIS:

The polygons containing place names are stored in a shapefile and a KML file. They mark locations on the map that possibly represent tells and Roman ruins. The KML can be downloaded and added to Google Earth as a Project, as shown below. This allows an examination of the landscape near each label as seen from a satellite. I have not examined these sites, and I don’t have the expertise or evidence to determine whether they are archaeological sites. This is meant as a proof of technology for finding areas of interest. If you’d like a copy of the KML or shapefile, feel free to contact me on Twitter @jeffblackadar.
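For readers who want to build a similar file, here is a minimal sketch of writing labelled polygons to KML with Python’s standard library. It is not the code I used: the function name `labels_to_kml` is hypothetical, it assumes each label already has a polygon in longitude/latitude, and the coordinates in the example are placeholders rather than real positions from the map.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"


def labels_to_kml(labels):
    """Build a KML string from (name, ring) pairs.

    Each ring is a list of (lon, lat) corners; this input shape is an
    assumption made for the sketch.
    """
    ET.register_namespace("", KML_NS)  # write KML as the default namespace
    kml = ET.Element(f"{{{KML_NS}}}kml")
    doc = ET.SubElement(kml, f"{{{KML_NS}}}Document")
    for name, ring in labels:
        closed = list(ring)
        if closed[0] != closed[-1]:
            closed.append(closed[0])  # a KML ring repeats its first vertex
        placemark = ET.SubElement(doc, f"{{{KML_NS}}}Placemark")
        ET.SubElement(placemark, f"{{{KML_NS}}}name").text = name
        polygon = ET.SubElement(placemark, f"{{{KML_NS}}}Polygon")
        outer = ET.SubElement(polygon, f"{{{KML_NS}}}outerBoundaryIs")
        linear_ring = ET.SubElement(outer, f"{{{KML_NS}}}LinearRing")
        coords = ET.SubElement(linear_ring, f"{{{KML_NS}}}coordinates")
        # KML coordinate order is lon,lat,altitude
        coords.text = " ".join(f"{lon},{lat},0" for lon, lat in closed)
    return ET.tostring(kml, encoding="unicode")


# Placeholder coordinates, for illustration only.
example = [("Tell Arran", [(36.50, 33.50), (36.52, 33.50),
                           (36.52, 33.51), (36.50, 33.51)])]
print(labels_to_kml(example))
```

The resulting text can be saved with a `.kml` extension and opened in Google Earth or QGIS.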

The code I used is here.

Thank you to Drs. Kristen Hopper, Dan Lawrence and Hector Orengo for providing these georeferenced maps.

[1] Garcia-Molsosa A, Orengo HA, Lawrence D, Philip G, Hopper K, Petrie CA. Potential of deep learning segmentation for the extraction of archaeological features from historical map series. Archaeological Prospection. 2021;1–13. https://doi.org/10.1002/arp.1807