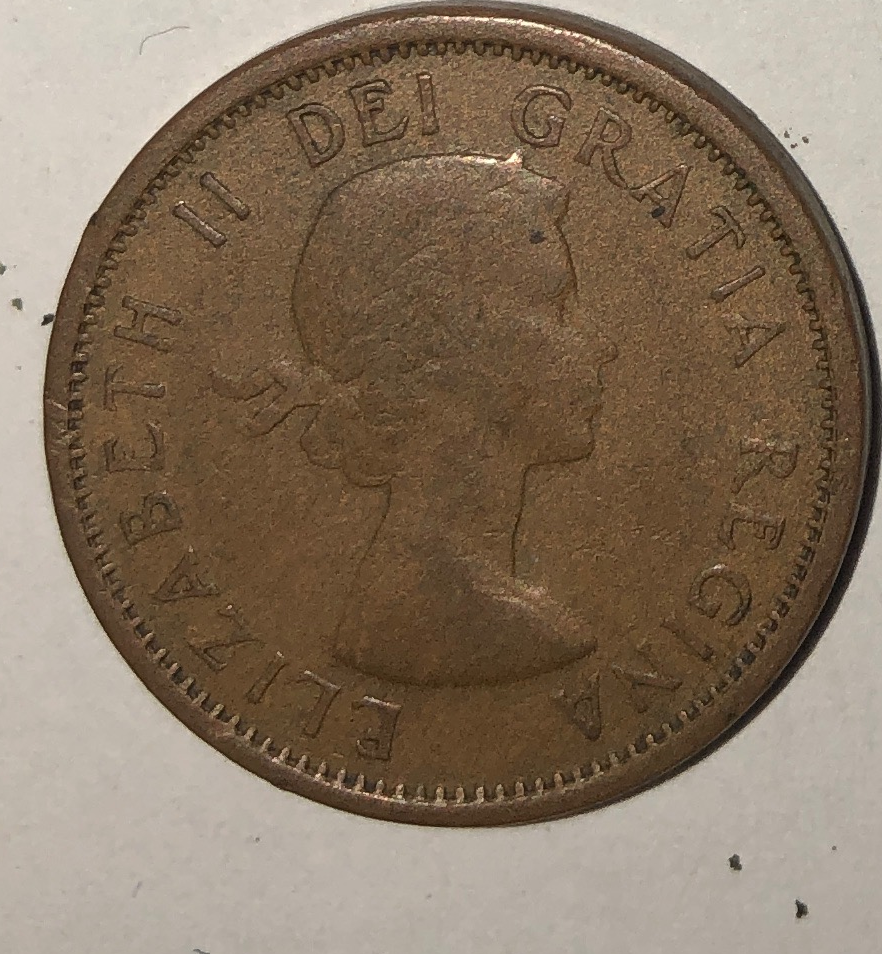

As mentioned in previous posts, I need numerous images to train an image recognition model. My goal is to have many examples of the image of Elizabeth II like the one below. To be efficient, I want a program to process many photographs of 1-cent coins, so that program must be able to reliably find the centre of the portrait.

To crop the image I tried two methods: 1. removing whitespace from the outside inward, and 2. finding the edge of the coin using OpenCV's cv2.HoughCircles function.

Removing whitespace from the outside inward is the simpler of the two methods. To do this I assume the edges of the image are white, color 255 in a grayscale image. If the mean value of pixel colors is 255 for a whole column of pixels, that whole column can be considered whitespace. If the mean value of pixel colors is lower than 255 I assume the column contains part of the darker coin. Cropping the image from the x value of this column will crop the whitespace from the left edge of the image.
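Because the test uses the mean, a handful of dark pixels in an otherwise white column lowers it only slightly. As a rough worked example (idealizing the column as pure black and white), suppose a column is $H$ pixels tall and $k$ of those pixels are black (0) while the rest are white (255):

$$
\bar{c} = \frac{255\,(H-k)}{H} = 255 - \frac{255\,k}{H}
$$

A single black pixel already pulls the mean below 255, but the mean stays at or above 254 as long as

$$
\frac{255\,k}{H} \le 1 \quad\Longleftrightarrow\quad k \le \frac{H}{255}
$$

So in a column 3000 pixels tall, up to 11 black pixels ($3000/255 \approx 11.8$) are still treated as whitespace by a cut-off of 254, while in a column shorter than 255 pixels even one black pixel is enough to count as part of the coin.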

```python
for img_col_left in range(0, round(gray_img.shape[1] / 2)):
    if np.mean(gray_img, axis=0)[img_col_left] < 254:
        break
```

The for loop in this code starts from the first column of pixels and moves toward the center. If the mean of a column is less than 254, the loop stops, since the edge of the coin has been found. I am using 254 instead of 255 to allow for some specks of dust or other imperfections in the white background. Using a for loop is not efficient (each pass also recomputes the mean of every column in the image), and this code should be improved, but I want to get this working first.
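The loop above could be replaced by a vectorized version. The sketch below (the function name whitespace_bounds and its white_cutoff parameter are hypothetical, my own choices) computes every row and column mean once with NumPy and takes the first and last indices that fall below the cut-off. It returns bounds usable directly as gray_img[top:bottom, left:right]. Unlike the loops, it searches the whole image rather than stopping at the midpoint, and it returns None for a completely white image.

```python
import numpy as np


def whitespace_bounds(gray_img, white_cutoff=254):
    """Return (top, bottom, left, right) of the non-white region.

    A row or column counts as whitespace when its mean is >= white_cutoff,
    the same test as the loop. bottom and right are exclusive, so the result
    can be used as gray_img[top:bottom, left:right].
    Returns None if every row or every column is whitespace.
    """
    # Indices of rows/columns whose mean falls below the cut-off,
    # i.e. that contain some of the (darker) coin.
    dark_rows = np.flatnonzero(gray_img.mean(axis=1) < white_cutoff)
    dark_cols = np.flatnonzero(gray_img.mean(axis=0) < white_cutoff)
    if dark_rows.size == 0 or dark_cols.size == 0:
        return None
    return (int(dark_rows[0]), int(dark_rows[-1]) + 1,
            int(dark_cols[0]), int(dark_cols[-1]) + 1)


# Usage: a white 6 x 8 image with a dark 2 x 3 block.
img = np.full((6, 8), 255, dtype=np.uint8)
img[2:4, 3:6] = 0
print(whitespace_bounds(img))  # (2, 4, 3, 6)
```

Because each mean is computed once over the whole array, the cost no longer grows with the number of loop iterations.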

Before the background is cropped, the image is thresholded to black and white and then converted to grayscale in order to simplify the edges. Here is the full function at this point.

```python
import time

import cv2
import numpy as np
from google.colab.patches import cv2_imshow  # Colab replacement for cv2.imshow


def img_remove_whitespace(imgo):
    print("start " + str(time.time()))

    # Convert to black and white to make the edges simpler.
    # 128 is the middle of black (0) and white (255) in an 8-bit channel.
    thresh = 128
    # Each B, G and R channel is set to 0 or 255 independently.
    img_binary = cv2.threshold(imgo, thresh, 255, cv2.THRESH_BINARY)[1]
    # cv2_imshow(img_binary)

    gray_img = cv2.cvtColor(img_binary, cv2.COLOR_BGR2GRAY)
    # cv2_imshow(gray_img)
    print(gray_img.shape)

    # Thanks https://likegeeks.com/python-image-processing/
    # croppedImage = img[startRow:endRow, startCol:endCol]

    # Mean of every row and every column, computed once.
    row_means = np.mean(gray_img, axis=1)
    col_means = np.mean(gray_img, axis=0)

    # Count in from each edge until the mean of a row/column drops below 254.
    # 254 (slightly less than 255) allows for some specks in the white background.
    for img_row_top in range(0, round(gray_img.shape[0] / 2)):
        if row_means[img_row_top] < 254:
            break
    print(img_row_top)

    for img_row_bottom in range(gray_img.shape[0] - 1, round(gray_img.shape[0] / 2), -1):
        if row_means[img_row_bottom] < 254:
            break
    print(img_row_bottom)

    for img_col_left in range(0, round(gray_img.shape[1] / 2)):
        if col_means[img_col_left] < 254:
            break
    print(img_col_left)

    for img_col_right in range(gray_img.shape[1] - 1, round(gray_img.shape[1] / 2), -1):
        if col_means[img_col_right] < 254:
            break
    print(img_col_right)

    # Crop the original colour image; +1 keeps the last dark row and column.
    imgo_cropped = imgo[img_row_top:img_row_bottom + 1, img_col_left:img_col_right + 1, 0:3]
    print("Whitespace removal")
    print(imgo_cropped.shape)
    # cv2_imshow(imgo_cropped)

    print("end " + str(time.time()))
    return imgo_cropped
```
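One detail of the conversion in this function: cv2.threshold is applied to the colour image, so each of the B, G and R channels is thresholded separately, and COLOR_BGR2GRAY then mixes them with the weights OpenCV documents (Y = 0.299 R + 0.587 G + 0.114 B). The result is therefore not strictly black and white: a copper-coloured pixel whose red channel alone exceeds 128 ends up mid-gray. The NumPy sketch below compares that order with converting to grayscale first and thresholding once. The helper names are hypothetical, and OpenCV's fixed-point rounding may differ from this approximation by one level.

```python
import numpy as np

# Weights for B, G, R as in OpenCV's COLOR_BGR2GRAY: Y = 0.299 R + 0.587 G + 0.114 B
BGR_WEIGHTS = np.array([0.114, 0.587, 0.299])


def threshold_then_gray(bgr, thresh=128):
    """Approximates cv2.threshold(..., THRESH_BINARY) followed by
    cv2.cvtColor(..., COLOR_BGR2GRAY): each channel becomes 255 if it is
    greater than thresh, else 0, and then the channels are mixed to gray."""
    binary = np.where(bgr > thresh, 255, 0).astype(np.float64)
    return np.rint(binary @ BGR_WEIGHTS).astype(np.uint8)


def gray_then_threshold(bgr, thresh=128):
    """The reverse order: convert to gray first, then threshold once,
    which gives a strictly black-and-white result."""
    gray = np.rint(bgr.astype(np.float64) @ BGR_WEIGHTS)
    return np.where(gray > thresh, 255, 0).astype(np.uint8)


# Three BGR pixels: white background, a copper-coloured pixel, a dark pixel.
pixels = np.array([[[255, 255, 255], [60, 110, 180], [30, 30, 30]]], dtype=np.uint8)
print(threshold_then_gray(pixels).tolist())  # [[255, 76, 0]]
print(gray_then_threshold(pixels).tolist())  # [[255, 0, 0]]
```

Either way, any pixel that is not white in all three channels lands well below 254 (at most about 226), so the whitespace test treats it as part of the coin. The order matters mainly if the thresholded image is reused for something else.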

A problem with this method is that some images have shadows that prevent the procedure from seeing the true edge of the coin. (See below.)

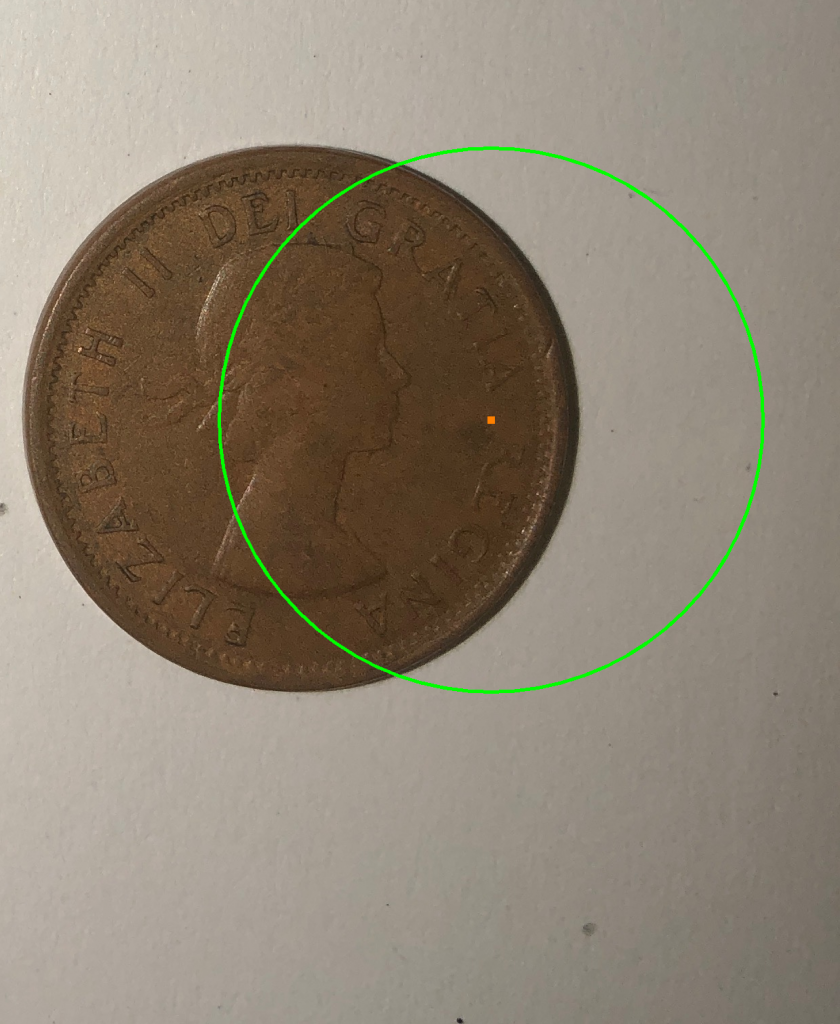

For cases like the image above, I tried to use OpenCV’s Hough Circles to find the boundary of the coin. Thanks to Adrian Rosebrock’s tutorial “Detecting Circles in Images using OpenCV and Hough Circles” I was able to apply this here.

In my case, cv2.HoughCircles found too many circles; in one image, for example, it found 95. Almost none of these circles were the edge of the coin. I tried several ways to pick out the circle that represented the edge of the coin. I sorted the circles by radius, reasoning that the largest circle was the edge, but it was not always. I looked for large circles that were completely inside the image but also got erroneous results. (See below.) Perhaps I am using this incorrectly, but I have decided this method is not reliable enough to be worthwhile, so I am going to stop using it. The code to use the Hough circles is below. Warning: there is likely a problem with it.

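```python
import cv2
import numpy as np
from google.colab.patches import cv2_imshow


# Hypothetical wrapper name so the fragment runs on its own. output is the
# colour image, gray_img its grayscale version, and good_coin_radius the
# expected coin radius in pixels; all three come from the surrounding code.
def crop_coin_with_hough_circles(output, gray_img, good_coin_radius):
    print("Since finding whitespace did not work, we will find circles. This will take more time")

    circles = cv2.HoughCircles(gray_img, cv2.HOUGH_GRADIENT, 1.2, 100)

    # ensure at least some circles were found
    if circles is None:
        return None

    print("Circles")
    print(circles.shape)

    # convert the (x, y) coordinates and radius of the circles to integers
    circles = np.round(circles[0, :]).astype("int")

    # sort by radius (the third value), largest first
    circles2 = sorted(circles, key=lambda c: c[2], reverse=True)
    print("There are " + str(len(circles2)) + " circles found in this image")

    height, width = output.shape[:2]
    margin = good_coin_radius * 0.9
    for cir in circles2:
        x, y, r = int(cir[0]), int(cir[1]), int(cir[2])

        # x is a column (bounded by the width), y is a row (bounded by the height)
        if (good_coin_radius * 0.9 < r < good_coin_radius * 1.1
                and margin < x < width - margin
                and margin < y < height - margin):
            print("I believe this is the right circle.")
            print(cir)

            # draw on a copy so the markers do not end up in the cropped coin
            preview = output.copy()
            cv2.circle(preview, (x, y), r, (0, 255, 0), 4)
            cv2.rectangle(preview, (x - 5, y - 5), (x + 5, y + 5), (0, 128, 255), -1)
            cv2_imshow(preview)

            # NumPy indexes images as [row, column], i.e. [y, x]; clamp at the top/left edge
            top, left = max(y - r, 0), max(x - r, 0)
            cropped = output[top:y + r, left:x + r].copy()

            # paint everything outside the circle white (filled-circle mask)
            # so that whitespace removal can trim it
            mask = np.zeros(cropped.shape[:2], dtype=np.uint8)
            cv2.circle(mask, (x - left, y - top), r, 255, -1)
            cropped[mask == 0] = (255, 255, 255)

            cropped = img_remove_whitespace(cropped)
            cv2_imshow(cropped)
            return cropped

    return None
```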
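The radius and position tests above can also be written as one vectorized filter over all the circles returned by cv2.HoughCircles. This is a sketch under stated assumptions, not a cure for the method's unreliability: the function name select_coin_circles and the tol parameter are hypothetical, and it expects the (N, 3) array of (x, y, r) rows obtained from circles[0]. It makes explicit that x is a column index, so it is checked against the image width (img.shape[1]), and y against the height (img.shape[0]).

```python
import numpy as np


def select_coin_circles(circles, image_shape, good_coin_radius, tol=0.1):
    """Keep circles whose radius is within +/- tol of good_coin_radius and
    whose centre is more than 0.9 * good_coin_radius from every image edge.

    circles: array of shape (N, 3), one (x, y, r) row per circle.
    image_shape: (height, width, ...) as returned by img.shape.
    Returns the matching rows sorted by radius, largest first.
    """
    circles = np.asarray(circles)
    height, width = image_shape[:2]
    x, y, r = circles[:, 0], circles[:, 1], circles[:, 2]
    margin = 0.9 * good_coin_radius
    keep = (
        (r > good_coin_radius * (1 - tol)) & (r < good_coin_radius * (1 + tol))
        # x is a column, so it is bounded by the width; y by the height.
        & (x > margin) & (x < width - margin)
        & (y > margin) & (y < height - margin)
    )
    selected = circles[keep]
    # Stable sort on the negated radius gives largest-first order.
    return selected[np.argsort(-selected[:, 2], kind="stable")]


circles = np.array([[100, 100, 50], [20, 100, 50], [300, 150, 48],
                    [200, 200, 80], [500, 100, 50]])
print(select_coin_circles(circles, (400, 600, 3), 50).tolist())
# [[100, 100, 50], [500, 100, 50], [300, 150, 48]]
```

In this example the circle centred at x = 500 is kept because 500 is compared with the 600-pixel width; comparing it with the 400-pixel height would wrongly reject it.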

My conclusion is that I am only going to use coins from the left half of each picture I take, since the photo flash works better there and there are fewer shadows. I will take care to remove debris around the coins that interferes with finding whitespace. Failing that, the routine removes photos that it can't crop to the expected size of the coin. This loses some photos, but that is acceptable here since I don't need every photo to train the image recognition model. Below is a rejected image. The cleaned-up code I am using right now is here.
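A rejection test like the one described could be as simple as comparing the cropped image's height and width with the expected coin diameter. This is a hypothetical sketch, not necessarily what the routine described above does: the name is_expected_coin_size, the 10% tolerance, and measuring the expected diameter in pixels from a known good photo are all assumptions.

```python
def is_expected_coin_size(cropped_shape, expected_diameter, tol=0.1):
    """Return True if a cropped image is roughly coin-sized.

    cropped_shape: (height, width, ...) of the cropped image, e.g. img.shape.
    expected_diameter: assumed coin diameter in pixels.
    tol: allowed fractional deviation in each dimension.
    """
    height, width = cropped_shape[:2]
    low = expected_diameter * (1 - tol)
    high = expected_diameter * (1 + tol)
    return low <= height <= high and low <= width <= high


# A crop that a shadow stretched vertically is rejected.
print(is_expected_coin_size((980, 1010, 3), 1000))   # True
print(is_expected_coin_size((1400, 1000, 3), 1000))  # False
```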