A finding aid for the Shawville Equity.

This wasn’t an exercise for this week, but I started the week wanting to create a list of the Equity files to make it easier to navigate to the coverage of annual events. Last week I was looking at the annual reporting of the Shawville Fair, and it took a bit of guesswork to pick the right edition of the Equity. Over time, this led to sore eyes from opening PDFs I didn’t need and discomfort in my mouse hand.

To get a list of URLs, I used wget's spider option to record on my machine all of the directories that hold the Equity files on the BAnQ website, without downloading the files themselves.

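```shell
# --spider: don't save the pages; -r: recurse; -w1: wait 1 second between requests; -np: never ascend to the parent directory
wget --spider --force-html -r -w1 -np http://collections.banq.qc.ca:8008/jrn03/equity/
```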

`ls -R` listed all of the directories, and I redirected that output to a text file. Using `grep` to keep only the lines containing “collections.banq.qc.ca:8008/jrn03/equity/src/” gave me a list of directories, to each of which I prepended “http://” to make URLs such as “http://collections.banq.qc.ca:8008/jrn03/equity/src/1990/05/02/”.

I started editing this list in Excel, but realized it would be more educational and, just as importantly, reproducible if I wrote an R script. I am thinking this R script would note conditions such as:

- The first edition after the first Sunday of November every 4 years would have municipal election coverage.

- The annual dates of the Shawville and Quyon fairs.

- Other significant annual events as found in tools such as AntConc.

That’s not done yet.
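As a rough idea of what the first steps of that script could look like, here is a minimal sketch written in Python rather than R. It rebuilds edition URLs from the `ls -R` listing and applies the election rule from the list above. It assumes each directory appears in the listing as a header line such as `./collections.banq.qc.ca:8008/jrn03/equity/src/1990/05/02:` and that the caller supplies the election years; the function names, sample lines and the example year 1990 are hypothetical.

```python
import re
from datetime import date, timedelta

# Assumed shape of a directory header in `ls -R` output (hypothetical example):
# "./collections.banq.qc.ca:8008/jrn03/equity/src/1990/05/02:"
EDITION_RE = re.compile(
    r"(collections\.banq\.qc\.ca:8008/jrn03/equity/src/(\d{4})/(\d{2})/(\d{2}))/?:?$"
)


def editions_from_listing(lines):
    """Return sorted (date, url) pairs for day-level edition directories."""
    editions = {}
    for line in lines:
        match = EDITION_RE.search(line.strip())
        if match:
            path, year, month, day = match.groups()
            # Same step as prepending "http://" by hand, plus a trailing slash
            editions[date(int(year), int(month), int(day))] = "http://" + path + "/"
    return sorted(editions.items())


def first_sunday_of_november(year):
    """Date of the first Sunday in November of the given year."""
    nov_1 = date(year, 11, 1)
    # date.weekday(): Monday is 0, Sunday is 6
    return nov_1 + timedelta(days=(6 - nov_1.weekday()) % 7)


def election_editions(editions, election_years):
    """Map each election year to the first edition dated after its first Sunday of November."""
    result = {}
    for year in election_years:
        sunday = first_sunday_of_november(year)
        later = [edition for edition in editions if edition[0] > sunday]
        if later:
            result[year] = later[0]  # editions are sorted, so this is the earliest
    return result


if __name__ == "__main__":
    # Hypothetical sample lines; 1990 is used only as an example election year
    sample = [
        ".:",
        "./collections.banq.qc.ca:8008/jrn03/equity/src/1990:",
        "./collections.banq.qc.ca:8008/jrn03/equity/src/1990/10/31:",
        "./collections.banq.qc.ca:8008/jrn03/equity/src/1990/11/07:",
        "./collections.banq.qc.ca:8008/jrn03/equity/src/1990/11/14:",
    ]
    print(election_editions(editions_from_listing(sample), [1990]))
```

With these sample lines, the first Sunday of November 1990 falls on 4 November, so the sketch picks the 7 November edition; the `.:` header and the year-level line are skipped because they lack a full year/month/day path.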

Using R.

I had not used R before, and I am excited by the power of the language. I also liked seeing how well it integrates with Git: I was able to add and commit my .Rproj file and my script (a .R file) and push them to GitHub.

The illustrations below come from completing Dr. Shawn Graham’s tutorial on Topic Modelling in R.

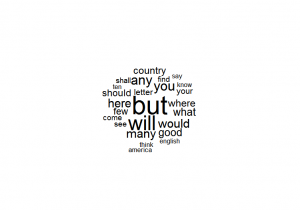

Here is a result of using the wordcloud package in R:

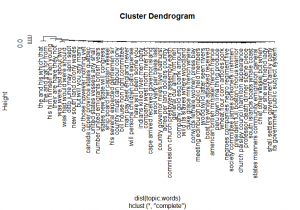

R also produced a diagram representing the words that topics share, and, using the cluster and igraph packages, a beautiful representation of the links between topics based on their distribution in documents.

I can see that R and its packages offer tremendous flexibility and potential for DH projects.

Exercise 5, Corpus Linguistics with AntConc.

I ran AntConc locally. For the corpus, I first used all of the Shawville Equity text files from 1900 to 1999 and then searched the corpus for occurrences of “flq”. My draft results are here.
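A search like this produces a keyword-in-context (KWIC) concordance. The Python sketch below illustrates the idea; it is not how AntConc works internally, and the function names and the 40-character context width are arbitrary choices.

```python
import re
from pathlib import Path


def kwic(texts, term, width=40):
    """Yield (left, match, right) keyword-in-context triples for a term or phrase."""
    # Whole-word, case-insensitive match, so "flq" also finds "FLQ"
    pattern = re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
    for text in texts:
        flat = " ".join(text.split())  # collapse line breaks so phrases can span lines
        for match in pattern.finditer(flat):
            left = flat[max(0, match.start() - width):match.start()]
            right = flat[match.end():match.end() + width]
            yield left, match.group(0), right


def read_texts(folder):
    """Read every .txt file under a folder (the folder layout is an assumption)."""
    return [p.read_text(encoding="utf-8", errors="replace")
            for p in sorted(Path(folder).rglob("*.txt"))]
```

It could be called as `kwic(read_texts("equity_1900_1999"), "flq")`, where the folder name is hypothetical. Because line breaks are collapsed first, the same function also finds a phrase such as “map of Shawville” when it is split across lines of OCR text.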

When I generated a word list, AntConc failed with an error, so I switched to a smaller corpus of 11 complete years, 1960 to 1970, roughly the span from the start of the Quiet Revolution to the October Crisis.

Initially, I did not include a stop word list, because I wanted to compare the most frequent word, “the”, with “le”, which appears to be the most frequent French word. “The” occurred 322,563 times. “Le” was the 648th most common word in the corpus and occurred 1,656 times.

The word “English” occurred 1,226 times, while “Français” occurred 20 times between 1960 and 1970.
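These comparisons rest on a ranked word list: count every token, sort by frequency, and read off a word's rank and count. A minimal Python sketch of that idea follows. It lowercases everything and treats runs of letters (accented letters included) as words, which is an assumption; AntConc's own token settings may give slightly different counts, and the alphabetical tie-breaking here is an arbitrary choice.

```python
import re
from collections import Counter

# Runs of letters, including accented ones such as "ç"; digits and "_" are excluded
TOKEN_RE = re.compile(r"[^\W\d_]+")


def word_list(texts):
    """Return (word, count) pairs, most frequent first, ties broken alphabetically."""
    counts = Counter()
    for text in texts:
        counts.update(TOKEN_RE.findall(text.lower()))
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


def rank_and_count(ranked, word):
    """Return the 1-based (rank, count) of a word in a ranked list, or None if absent."""
    for rank, (token, count) in enumerate(ranked, start=1):
        if token == word:
            return rank, count
    return None
```

Calling `rank_and_count(word_list(texts), "le")` on a list of the 1960 to 1970 texts gives the kind of rank and count reported above. Because everything is lowercased, “Français” and “français” are counted together, which may differ from a case-sensitive search.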

Keyword list in AntConc.

To work with AntConc's keyword list tool, I used the Equity text files for the complete years 1960 to 1969 as the corpus and, for comparison, the files from 1990 to 1999 as the reference corpus. I also used Matthew L. Jockers's stop word list. Despite the stop word list, I still got useless keywords such as S and J.

Despite this, I find it interesting that two of the top 40 keywords concern religion, “rev” and “church”, and two others may also relate to church: “service” and “Sunday”. Sunday is also the highest-ranked day of the week among the keywords. Is this an indicator that Shawville remained a comparatively more religious community than other Quebec communities whose members left their churches in the wake of the Quiet Revolution?

Details of these results are in my fail log.
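A keyword list ranks words by how much more often they occur in the target corpus (here 1960 to 1969) than in the reference corpus (1990 to 1999), using a keyness statistic; log-likelihood is one of the measures AntConc offers. The Python sketch below illustrates that calculation under stated assumptions: the stop word set passed in stands in for a list such as Jockers's, dropping one-letter tokens is an extra filter added here to suppress initials like S and J, and corpus sizes are counted after filtering. None of this describes AntConc's exact behaviour.

```python
import math
import re
from collections import Counter

TOKEN_RE = re.compile(r"[^\W\d_]+")  # runs of letters, accented letters included


def filtered_counts(texts, stopwords):
    """Count lowercase tokens, skipping stop words and one-letter tokens such as initials."""
    counts = Counter()
    for text in texts:
        for token in TOKEN_RE.findall(text.lower()):
            if token not in stopwords and len(token) > 1:
                counts[token] += 1
    return counts


def log_likelihood(a, b, c, d):
    """Log-likelihood (G2) for a word seen a times in c target tokens and b times in d reference tokens."""
    expected_target = c * (a + b) / (c + d)
    expected_reference = d * (a + b) / (c + d)
    total = 0.0
    if a:  # a term with zero observations contributes 0
        total += a * math.log(a / expected_target)
    if b:
        total += b * math.log(b / expected_reference)
    return 2 * total


def keywords(target_texts, reference_texts, stopwords=frozenset(), top=40):
    """Return up to `top` (word, keyness, target_count, reference_count) tuples, most key first."""
    target = filtered_counts(target_texts, stopwords)
    reference = filtered_counts(reference_texts, stopwords)
    # Corpus sizes are taken after filtering, a simplification made for this sketch
    c, d = sum(target.values()), sum(reference.values())
    if c == 0 or d == 0:
        return []
    scored = []
    for word, a in target.items():
        b = reference.get(word, 0)
        if a / c > b / d:  # keep words relatively more frequent in the target corpus
            scored.append((word, log_likelihood(a, b, c, d), a, b))
    scored.sort(key=lambda row: (-row[1], row[0]))
    return scored[:top]
```

Keeping only words that are relatively more frequent in the target corpus gives a list of positive keywords; words that are key to the 1990s corpus would need the reverse comparison.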

Exercise 6, Text Analysis with Voyant.

After failing to upload a large number of Shawville Equity text files directly into Voyant, I put them into zip files and hosted them on my website instead. This allowed me to create a Shawville Equity corpus in Voyant. I was able to load all of the text files from 1960 to 1999, but Voyant then produced errors, so I switched to a smaller corpus covering 1970 to 1980.

Local map in the Shawville Equity.

I tried to find a map of Shawville in the Equity by searching for terms like “map of Shawville”, but I have not found one yet. If you find a map of Shawville in the Equity, please let me know.

Conclusion.

This was an excellent week of learning for me, particularly seeing what R can do. I would like more time to work with the mapping tools in the future.