I have a few dozen pictures of Elizabeth II from Canadian 1 cent pieces. I want to see if I can train a model that can inpaint a partial image. Haiyan Wang, Zhongshi He, Dingding Chen, Yongwen Huang, and Yiman He have written an excellent study of this technique in their article “Virtual Inpainting for Dazu Rock Carvings Based on a Sample Dataset” in the Journal on Computing and Cultural Heritage [1].

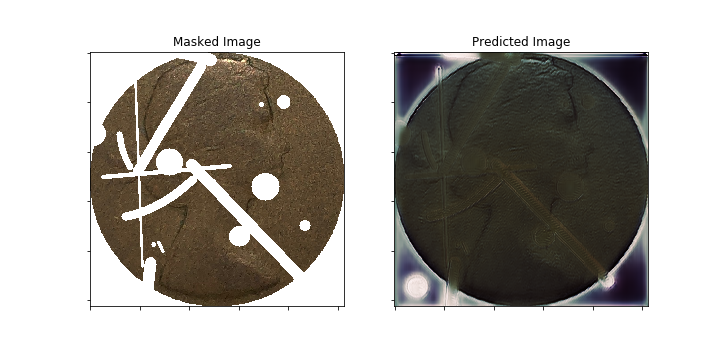

I am using Mathias Gruber’s PConv-Keras repository on GitHub (https://github.com/MathiasGruber/PConv-Keras) to do image inpainting. His results are impressive. As a caveat for my own results, I am not yet training the inpainting model nearly as long as Gruber does: I am using Google Colab, which is not meant for long-running processes, so I train with a small number of steps and epochs. Even with this constraint, I am seeing promising results.
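To make "a partial image" concrete, here is a minimal sketch of how a training pair for inpainting can be built: a binary mask marks the hole, and the masked image is what the model sees. This is only an illustration in NumPy, not code from PConv-Keras; the function name `mask_rectangle`, the rectangular hole, and the choice to fill the hole with white are my assumptions.

```python
import numpy as np

def mask_rectangle(image, top, left, height, width):
    """Return (masked_image, mask) with a rectangular hole cut out.

    Hypothetical helper for illustration only.
    `image` is a float array scaled to [0, 1], shape (H, W) or (H, W, C).
    `mask` is 1 where pixels are kept and 0 inside the hole.
    """
    image = np.asarray(image, dtype=float)
    mask = np.ones_like(image)
    mask[top:top + height, left:left + width] = 0.0
    # Keep valid pixels and paint the hole white (1.0).
    masked = image * mask + (1.0 - mask)
    return masked, mask

# Stand-in for a 64x64 RGB coin photo (random pixels, not real data).
coin = np.random.default_rng(0).random((64, 64, 3))
masked, mask = mask_rectangle(coin, top=16, left=16, height=32, width=32)
```

The model is then trained to reproduce `coin` from `masked` and `mask`, so at prediction time it can fill in a region that is genuinely missing.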

The steps used to set up Mathias Gruber’s PConv-Keras in Google Colab are here. Thanks to Eduardo Rosas for these instructions, which let me get this set up.

Using Gruber’s PConv-Keras I have been able to train a model to perform image inpainting. My next steps are to refine the model, train it for longer, and look for improved results. The code and results I am working on are on my Google Drive at this time. The images I am using are here.

This week I improved the program to process coin images as well. I get better results by tolerating more impurities in the background of the picture when finding whitespace (I use white_mean = 250, not 254 or 255). This version is on GitHub.
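As a rough illustration of why the threshold matters, here is a minimal sketch of whitespace detection by row and column means. It is not the actual program: the function name `crop_to_content` and the row/column-mean approach are assumptions on my part, and only the `white_mean = 250` setting comes from my code.

```python
import numpy as np

def crop_to_content(gray, white_mean=250):
    """Crop a grayscale image (0 = black, 255 = white) to its content.

    Hypothetical helper for illustration only.
    A row or column is treated as whitespace when its mean pixel
    value is at least `white_mean`.
    """
    gray = np.asarray(gray, dtype=float)
    content_rows = np.where(gray.mean(axis=1) < white_mean)[0]
    content_cols = np.where(gray.mean(axis=0) < white_mean)[0]
    if content_rows.size == 0 or content_cols.size == 0:
        return gray[:0, :0]  # the whole image is whitespace
    return gray[content_rows[0]:content_rows[-1] + 1,
                content_cols[0]:content_cols[-1] + 1]

# Synthetic scan: an off-white background (252) with a dark 4x4 "coin".
page = np.full((10, 10), 252.0)
page[3:7, 2:6] = 60.0

tolerant = crop_to_content(page, white_mean=250)  # background is whitespace
strict = crop_to_content(page, white_mean=254)    # background counts as content
```

With the tolerant setting the off-white background is trimmed away and only the coin remains; with the strict setting the slightly impure background is never recognised as whitespace, so nothing is cropped.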

[1] Wang, Haiyan, Zhongshi He, Dingding Chen, Yongwen Huang, and Yiman He. 2019. “Virtual Inpainting for Dazu Rock Carvings Based on a Sample Dataset.” Journal on Computing and Cultural Heritage 12 (3): 1-17.